Welcome!

Welcome to the website of the Communication Systems Group (Fachgebiet Nachrichtenübertragung) at Technische Universität Berlin. Our group emerged in 2002 from Prof. Peter Noll's Telecommunications Engineering group (Fachgebiet Fernmeldetechnik). It is part of the Institute of Telecommunication Systems (Institut für Telekommunikationssysteme) in Faculty IV. The group is headed by Prof. Thomas Sikora.

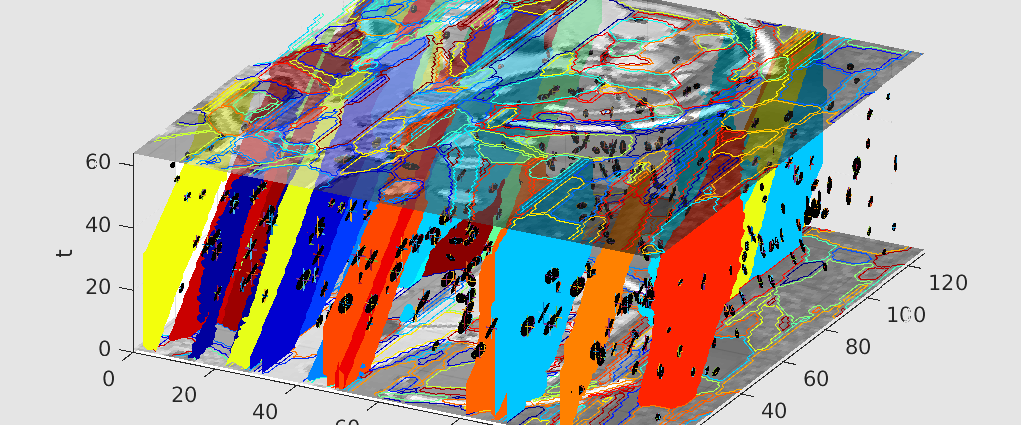

Teaching and research in the group cover all major areas of digital signal processing for multimedia applications. The main focus is on the analysis, description, segmentation, classification, and coding of speech, audio, and video data.

© NUe


© MICHEL HOUET LIEGE BELGIUM
